Shipa Cloud is how we run the Shipa control plane on behalf of our users, giving them the fastest possible path to implementing Application as Code within their clusters. You can try Shipa Cloud for free today at shipa.io. Besides being the quickest way to get started with Shipa, it removes the responsibility for upgrades, maintenance, and uptime of the control plane from our users, but that responsibility doesn’t just disappear. It is taken on by the Site Reliability team at Shipa. Keep reading for a glimpse of how Shipa maintains Shipa Cloud to deliver the best possible balance of functionality and availability.

Architectural Overview – 10,000 Foot View

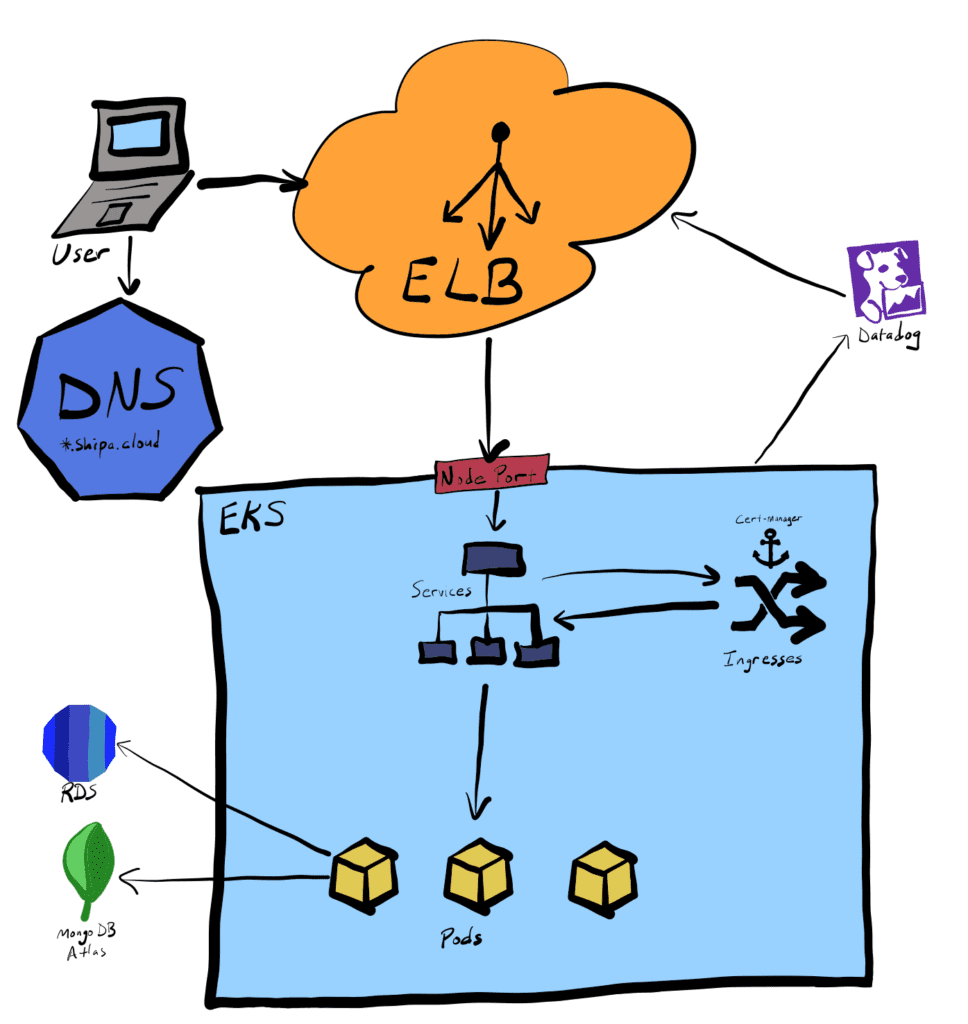

As the primary use case for Shipa is helping users manage applications running on Kubernetes, it only makes sense that we use Kubernetes ourselves. In fact, the same base Kubernetes resources used in an on-prem installation of Shipa are also present in Shipa Cloud. When you go to apps.shipa.cloud, you connect to our dashboard-web deployment through our ingress controller, which uses the cert-manager deployment installed by Shipa to retrieve a Let’s Encrypt certificate.

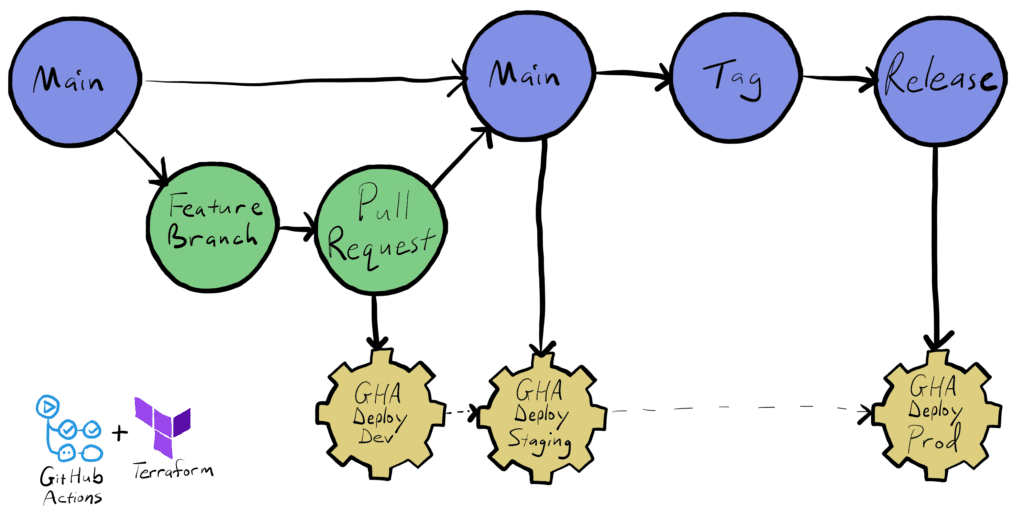

While the production instance of Shipa Cloud is the only environment that external users are given access to, this same architecture also exists as separate “dev” and “staging” environments.

External Services – SaaS built with SaaS

To offload operational functions that don’t provide us any differentiating value, we use Software as a Service offerings ourselves, particularly for persistence. Since we use EKS, we don’t need to worry about managing etcd during a Kubernetes upgrade. We also don’t want to manage volumes for in-cluster databases, so for our MongoDB and PostgreSQL instances we use MongoDB Atlas and AWS RDS, respectively. This lets us handle upgrades, storage, backups, and performance through curated, managed experiences that don’t put our cluster at risk.

Managing Change – Delivering Value and Reliability

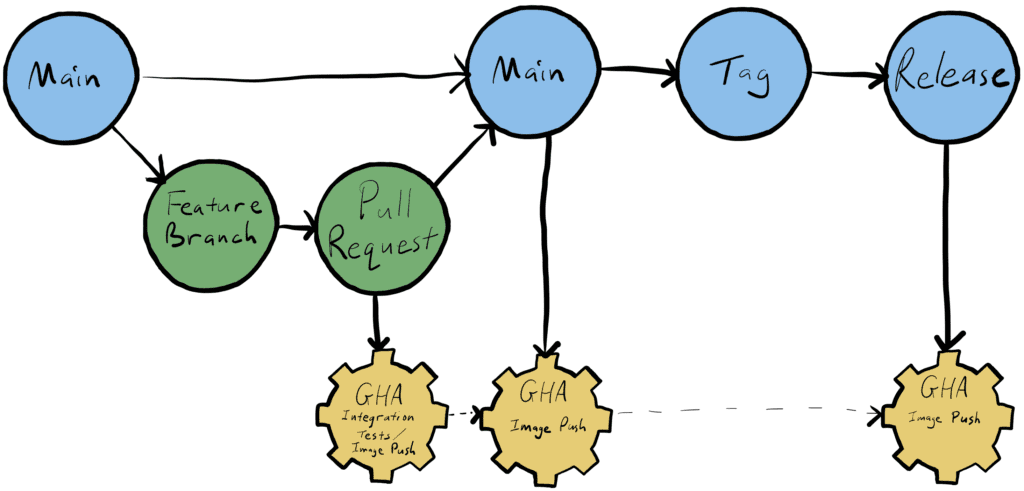

With a solid infrastructure in place and users happily using Shipa Cloud, we need to be responsible about how we introduce changes. This starts with development practices and carries all the way through release and beyond. For the individual components of Shipa Cloud, we practice what could be described as trunk-based development with feature branches. Work is tracked in Jira and tied to a feature branch and its commits. When the work is ready, a pull request is created, which starts both an internal discussion about the change and the automation that makes the change available.

When the pull request is created, a GitHub Actions workflow installs Shipa into an “integration” Kubernetes cluster, where the latest versions of all the Shipa components are tested together with the component being updated. The integration tests live in a separate repository, so they can be run from any of our other repos. Once the integration tests pass and at least one peer has reviewed the change, the pull request can be merged to the mainline, which makes the built container image available for deployment to one of our Shipa Cloud environments. After the image has been vetted further in our dev and staging environments, a tag can be created from the mainline to represent a release, which causes the container image to be tagged for general use.
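The merge and release gates in that flow can be summarized as a couple of predicates. This is a minimal Python sketch, not our actual pipeline code: the `PullRequest` type, `can_merge`, `can_tag_release`, and the single-approval default are hypothetical names chosen to mirror the rules in the text (integration tests passing, at least one peer review, and vetting in dev and staging before a release tag).

```python
from dataclasses import dataclass
from typing import Iterable


# Hypothetical model of a pull request; field names are illustrative only.
@dataclass
class PullRequest:
    integration_tests_passed: bool
    approvals: int


# Pre-release environments an image is vetted in before a release tag is cut.
PRE_RELEASE_ENVIRONMENTS = ("dev", "staging")


def can_merge(pr: PullRequest, required_approvals: int = 1) -> bool:
    """Merge to mainline only after integration tests pass and enough peers approve."""
    return pr.integration_tests_passed and pr.approvals >= required_approvals


def can_tag_release(vetted_in: Iterable[str]) -> bool:
    """Tag a release only once the image has been vetted in every pre-release environment."""
    vetted = set(vetted_in)
    return all(env in vetted for env in PRE_RELEASE_ENVIRONMENTS)


if __name__ == "__main__":
    print(can_merge(PullRequest(integration_tests_passed=True, approvals=1)))  # True
    print(can_merge(PullRequest(integration_tests_passed=True, approvals=0)))  # False
    print(can_tag_release(["dev"]))             # False
    print(can_tag_release(["dev", "staging"]))  # True
```

Keeping the rules as small, pure functions like this makes them easy to reason about, whatever tool ends up enforcing them.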

The definitions of all the Kubernetes resources needed in Shipa Cloud are managed in a Helm chart. Updates to the chart follow the same flow as any other repository we have, except that instead of a container image we end up with a .tgz file. Here again, we use the tag/release process to manage the name of the artifact, which lets us test things internally without confusing our users with constant changes.
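To illustrate how a tag/release process can keep internal artifacts from confusing users, here is a Python sketch of one possible naming scheme. It assumes release tags look like `v1.4.2` and that untagged builds get a SemVer pre-release suffix built from a CI build number; both conventions are assumptions, not necessarily what our pipeline does. The only piece taken from Helm itself is that `helm package` names its output `<chart-name>-<version>.tgz`. The chart name `shipa` below is hypothetical.

```python
import re
from typing import Optional

# Assumed convention: release tags look like "v1.4.2".
RELEASE_TAG = re.compile(r"v(\d+\.\d+\.\d+)")


def chart_version(git_tag: Optional[str], next_version: str, build_number: int) -> str:
    """Use the release tag's version if there is one; otherwise build a SemVer pre-release."""
    if git_tag:
        match = RELEASE_TAG.fullmatch(git_tag)
        if match:
            return match.group(1)
    # Under SemVer, "1.5.0-dev.7" sorts before "1.5.0", so the eventual
    # release always supersedes the internal builds that preceded it.
    return f"{next_version}-dev.{build_number}"


def chart_artifact_name(chart: str, version: str) -> str:
    """`helm package` names its output <chart-name>-<version>.tgz."""
    return f"{chart}-{version}.tgz"


if __name__ == "__main__":
    print(chart_artifact_name("shipa", chart_version("v1.4.2", "1.5.0", 7)))  # shipa-1.4.2.tgz
    print(chart_artifact_name("shipa", chart_version(None, "1.5.0", 7)))      # shipa-1.5.0-dev.7.tgz
```

A pre-release suffix fits the goal of not confusing users: Helm skips pre-release chart versions when picking a version unless explicitly asked (for example with `helm install --devel`), so internal builds stay out of the way of anyone installing the latest release.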

With any Helm or image changes ready to go, we use Terraform, triggered by GitHub Actions, to promote changes through the environments. Again we use trunk-based development with feature branches, keeping all of our Terraform in a single repo and always working off the mainline. Rather than using different branches to represent different environments, we use different configuration files to capture the environmental differences. This makes it simpler to compare environments, since we only have to look at file diffs rather than compare branches.
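Because each environment is just a file in the same repo, comparing environments comes down to an ordinary text diff. The sketch below uses Python's standard `difflib` module; the file names and the settings inside them (`region`, `node_count`, `chart_version`) are hypothetical stand-ins for whatever per-environment variables a Terraform configuration actually takes.

```python
import difflib

# Hypothetical per-environment variable files kept side by side in one repo.
STAGING = """\
region = "us-east-1"
node_count = 3
chart_version = "1.5.0-dev.7"
"""

PRODUCTION = """\
region = "us-east-1"
node_count = 6
chart_version = "1.4.2"
"""


def environment_diff(a_name: str, a_text: str, b_name: str, b_text: str) -> list:
    """Return only the lines that differ between two environment files (n=0: no context)."""
    return list(
        difflib.unified_diff(
            a_text.splitlines(keepends=True),
            b_text.splitlines(keepends=True),
            fromfile=a_name,
            tofile=b_name,
            n=0,
        )
    )


if __name__ == "__main__":
    print("".join(environment_diff("staging.tfvars", STAGING, "production.tfvars", PRODUCTION)))
```

The shared `region` line never appears in the output, so the diff shows exactly what makes production different from staging.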

Within Terraform, we rely on the AWS provider to provision our infrastructure, then use the Helm provider to deploy our resources into the EKS cluster. With the control plane installed, we still need to deploy some extra apps to make it “Shipa Cloud”, for example the apps that let us accept payments. For this we wanted a way to deploy applications as code, enforcing policy while providing visibility, so of course we use Shipa! Within our Terraform code, we use the Shipa Terraform provider to put on the finishing touches.
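Terraform derives the order in which to create resources from a dependency graph, which is how the AWS infrastructure ends up existing before the Helm release, and the Helm release before the Shipa apps. As a simplified illustration (real Terraform also applies independent resources in parallel, which this ignores), Python's standard `graphlib.TopologicalSorter` can compute a valid order. The resource names and dependencies below are hypothetical.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical resources, each mapped to the resources it depends on.
DEPENDENCIES = {
    "aws: VPC": set(),
    "aws: IAM roles": set(),
    "aws: EKS cluster": {"aws: VPC", "aws: IAM roles"},
    "helm: Shipa control plane": {"aws: EKS cluster"},
    "shipa: payments app": {"helm: Shipa control plane"},
}

# static_order() yields every node only after all of its dependencies.
apply_order = list(TopologicalSorter(DEPENDENCIES).static_order())

if __name__ == "__main__":
    for step, resource in enumerate(apply_order, start=1):
        print(step, resource)
```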

We also use LaunchDarkly for feature toggles, which lets us deploy changes while making them available only to specific users. This allows us to test changes in production before rolling them out to everyone.
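Under the hood, a feature toggle boils down to evaluating a flag for each user. The sketch below is a generic illustration of user targeting plus an optional percentage rollout; it is not LaunchDarkly's SDK or its evaluation algorithm, and `FeatureFlag`, its fields, and the hashing scheme are hypothetical.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Set


@dataclass
class FeatureFlag:
    key: str
    enabled: bool = True
    targeted_users: Set[str] = field(default_factory=set)
    rollout_percent: int = 0  # 0 means only targeted users see the feature

    def is_on_for(self, user_id: str) -> bool:
        if not self.enabled:
            return False  # kill switch: off for everyone
        if user_id in self.targeted_users:
            return True  # explicitly targeted users always get the feature
        # Stable bucketing: hashing flag key + user id puts each user in the
        # same bucket (0-99) on every evaluation, so a rollout doesn't flicker.
        digest = hashlib.sha256(f"{self.key}:{user_id}".encode()).digest()
        bucket = int.from_bytes(digest[:4], "big") % 100
        return bucket < self.rollout_percent


if __name__ == "__main__":
    flag = FeatureFlag("new-billing-page", targeted_users={"tester@example.com"})
    print(flag.is_on_for("tester@example.com"))   # True
    print(flag.is_on_for("someone@example.com"))  # False
```

Testing in production then amounts to adding internal users to `targeted_users`, and rolling out to everyone amounts to raising `rollout_percent` to 100.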

For monitoring, we have Datadog checking the health of Shipa Cloud and reporting to Slack and email if there are any degradations.
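As a rough illustration of the kind of rule a health monitor applies, the sketch below flags a service as degraded when the share of failed checks in a window exceeds a threshold, then fans the alert out to every configured notifier. It is not Datadog's API: `is_degraded`, `check_and_alert`, the 20% threshold, and the plain callables standing in for the Slack and email integrations are all assumptions.

```python
from typing import Callable, Iterable, Sequence

Notifier = Callable[[str], None]  # stands in for a Slack or email integration


def is_degraded(results: Iterable[bool], max_failure_ratio: float = 0.2) -> bool:
    """True when the share of failed checks in the window exceeds the threshold."""
    results = list(results)
    if not results:
        return True  # design choice: no data at all is treated as a problem
    failures = results.count(False)
    return failures / len(results) > max_failure_ratio


def check_and_alert(service: str, results: Iterable[bool], notifiers: Sequence[Notifier]) -> bool:
    """Send one message to every notifier if the service is degraded."""
    if not is_degraded(results):
        return False
    message = f"{service} is degraded"
    for notify in notifiers:
        notify(message)
    return True


if __name__ == "__main__":
    check_and_alert("apps.shipa.cloud", [True] * 7 + [False] * 3, [print])
```

Treating a missing signal as degraded is deliberate here: a health check that silently stops reporting should wake someone up rather than look healthy.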

Networking – DNS, Security, and Load Balancing, oh my!

Managing DNS records can be difficult, and if you get it wrong, changes can take anywhere from minutes to hours to propagate across the various caching resolvers. In fact, when a security manager is installed, the default behavior for a Java app is to cache successful DNS lookups forever. To make things easier on our users and ourselves, Shipa decided to run our own nameservers to resolve shipa.cloud addresses; these run in a separate EKS cluster.
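To see why cached DNS answers delay changes, compare a resolver cache that honors each record's TTL with one that caches forever, like the JVM default with a security manager installed. This is a minimal Python sketch; the `DnsCache` class, the fake resolver, and the injected clock are hypothetical, and real resolvers add negative caching and much more.

```python
import time
from typing import Callable, Dict, Optional, Tuple

Resolver = Callable[[str], Tuple[str, int]]  # name -> (address, ttl_seconds)


class DnsCache:
    """Caches lookups for each record's TTL, or forever when honor_ttl is False."""

    def __init__(self, resolve: Resolver, clock: Callable[[], float] = time.monotonic,
                 honor_ttl: bool = True) -> None:
        self._resolve = resolve
        self._clock = clock
        self._honor_ttl = honor_ttl
        # name -> (address, expiry time, or None for "never expires")
        self._entries: Dict[str, Tuple[str, Optional[float]]] = {}

    def lookup(self, name: str) -> str:
        now = self._clock()
        cached = self._entries.get(name)
        if cached is not None:
            address, expires_at = cached
            if expires_at is None or now < expires_at:
                return address  # still fresh: no query to the nameserver
        address, ttl = self._resolve(name)
        expires_at = now + ttl if self._honor_ttl else None
        self._entries[name] = (address, expires_at)
        return address
```

With a 60-second TTL, a changed record is picked up about a minute later; a cache-forever instance keeps returning the old address until the process restarts.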

When you create a Shipa-managed app, it gets an automatic DNS name, which allows ingress to be configured; if the ingress has public access, cert-manager uses Let’s Encrypt to generate a certificate for your app. This is our preferred approach, but when Let’s Encrypt isn’t feasible, we can still load an AWS certificate into the ELB at the edge of our network. The ELB is created automatically when our ingress Service is created in Kubernetes, since that Service is of type LoadBalancer. The ELB balances traffic across the EKS worker nodes, which send requests to the ingress controllers, which call the Services, which hand the requests off to the pods. These layers of network abstraction can be complicated, but they allow deployments to be updated with as little interruption of service as possible.
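The last hop in that chain is where rolling updates avoid dropped requests: a Service only routes to pods that are ready, and a pod being replaced stops receiving new requests once it starts shutting down. The toy model below sketches that behavior in Python; `Pod`, `Service`, and `rolling_update` are hypothetical and greatly simplify what Kubernetes Services and Deployments actually do (no grace periods, probes, or surge settings).

```python
import itertools
from dataclasses import dataclass
from typing import Iterable, Iterator, List


@dataclass
class Pod:
    name: str
    version: str
    ready: bool = True


class Service:
    """Toy Service: round-robins requests across the pods that are currently ready."""

    def __init__(self, pods: Iterable[Pod]) -> None:
        self.pods: List[Pod] = list(pods)
        self._counter = itertools.count()

    def route(self) -> Pod:
        ready = [p for p in self.pods if p.ready]
        if not ready:
            raise RuntimeError("no ready endpoints")
        return ready[next(self._counter) % len(ready)]


def rolling_update(service: Service, new_version: str) -> Iterator[Pod]:
    """Replace old pods one at a time, yielding each old pod while it drains."""
    old_pods = [p for p in service.pods if p.version != new_version]
    for i, old in enumerate(old_pods):
        service.pods.append(Pod(f"web-{new_version}-{i}", new_version))  # new pod first
        old.ready = False  # draining: still present, but gets no new requests
        yield old  # the caller can keep sending traffic at this point
        service.pods.remove(old)  # terminated once drained


if __name__ == "__main__":
    svc = Service([Pod("web-v1-0", "v1"), Pod("web-v1-1", "v1")])
    for draining in rolling_update(svc, "v2"):
        print([svc.route().name for _ in range(4)], "while draining", draining.name)
```

Because a new pod is added before an old one is taken out of rotation, there is always at least one ready endpoint, which is the property that keeps the update from interrupting service.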

For some further reading on ingress and certificates check out the following:

Shipa, Your Partner in Your Own SaaS Journey

At Shipa, we take great pride in operating our SaaS to help those creating their own services, and even their own SaaS, thrive. We certainly drink our own champagne at Shipa, leveraging as much of our platform as we can to run our own platform. Make sure to check out one of our upcoming Office Hours to learn more, and sign up for Shipa Cloud today!

-Brendon