TL;DR

Implementing an application-level API enables you to:

- Detach the application from the underlying infrastructure.

- Evolve and scale the Kubernetes infrastructure quickly without affecting developer experience or speed.

- Leverage your current development stack and experiment with different technologies while keeping the same application-level definitions.

- Reduce pipeline complexity and chaos.

- Deliver value to development, security, and management teams quickly.

The Current State

The Kubernetes API is the front end of the Kubernetes control plane and is how users can interact with their clusters. In essence, it’s the interface used to manage, create, and configure the cluster and the state of objects.

By standardizing on common interfaces, Kubernetes let teams build against shared constructs and consume infrastructure across different providers. Examples of this approach include the Container Network Interface (CNI), the Container Runtime Interface (CRI), and the Container Storage Interface (CSI).

These constructs encapsulated the know-how to interact with the underlying hardware and infrastructure resources. They enabled vendors and teams to rapidly build components and services on top of Kubernetes, creating an ever-growing and robust ecosystem of offerings.

Application Management Complexities

While these API features fuelled Kubernetes adoption and ecosystem growth, they also exposed infrastructure-level constructs that developers and operators must use when deploying, managing, and securing applications.

Many of the functions and configurations needed to manage applications on Kubernetes take significant time and expertise to set up and maintain.

Critical components (such as dashboards, monitoring, and policy enforcement) are add-ons, and nearly all application-related features require teams to build custom integrations. This alone can multiply complexity for mid-size to large teams running multiple clusters and a growing number of applications.

Sure, you may say that you need complete control over all the available infrastructure-level APIs because your use case is *unique*.

Some use cases are indeed unique. However, after interviewing hundreds of users, we found that 70 to 80% of their workloads are not that unique: they need a standard set of services to be deployed, managed, and secured successfully.

So as the ecosystem continues to evolve and adoption accelerates, the next step for Kubernetes in winning the hearts and minds of developers and platform operators is an application-level API.

How can you implement an application-level API that speeds up the adoption and management of that 70-80% of your workloads without getting in the way of the unique 20-30%?

Implementing an Application API

Many startups are trying to “make Kubernetes easier” by offering a friendly dashboard, a clever way to consume Kubernetes namespaces, or similar solutions.

In the end, most of these solutions still expose infrastructure-level APIs and constructs. You still face most of the challenges described above and must build and maintain custom solutions on top of them to drive developer adoption and scalability.

That led us to take a completely different approach.

We started designing an application-level API here at Shipa, and we had the following requirements in mind:

- Users will have multiple clusters running different Kubernetes versions across different providers.

- An application-level API should bring separation of concerns between developers and operators.

- Operators should focus on governance, control, and scalability.

- Developers should focus on deploying their applications AND managing them after deployment.

- The application API should provide the standard services both operators and developers need at deployment and post-deployment time, such as monitoring, RBAC, and audit trails.

- Operators need to scale and change the underlying infrastructure without impacting the developer experience of deploying and managing applications.

- The API should integrate into existing workflows and tech stacks, such as IaC and CI/CD pipelines.

- There should be a common dashboard where developers and operators can find the information they need to operate applications, policies, and governance.

We believe that delivering on the requirements above enables you to:

- Detach the application from the underlying infrastructure.

- Evolve and scale the Kubernetes infrastructure quickly without affecting developer experience or speed.

- Leverage your current development stack and experiment with different technologies while keeping the same application-level definitions.

- Reduce pipeline complexity and chaos.

- Deliver value to development, security, and management teams quickly.
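To make the first two goals concrete, here is a minimal TypeScript sketch. It assumes a hypothetical developer-owned `AppSpec` type and a hypothetical operator-owned `ClusterTarget` interface; neither is part of Shipa's API, and `StubCluster` only returns a string where a real target would call a cluster's Kubernetes API.

```typescript
// Hypothetical developer-facing definition: no cluster, provider, or version details.
interface AppSpec {
  name: string;
  image: string;
  port: number;
}

// Hypothetical operator-facing abstraction over one concrete cluster.
interface ClusterTarget {
  readonly id: string;
  deploy(app: AppSpec): string;
}

// Stub target: a real one would translate AppSpec into Kubernetes objects
// and submit them to the cluster identified by `id`.
class StubCluster implements ClusterTarget {
  constructor(readonly id: string) {}

  deploy(app: AppSpec): string {
    return `deployed ${app.name} (${app.image}) to ${this.id}`;
  }
}

// Operators choose the target; the developer's AppSpec is passed through unchanged.
function deployApp(app: AppSpec, target: ClusterTarget): string {
  return target.deploy(app);
}

const app: AppSpec = {
  name: "bulletinboard",
  image: "docker.io/shipasoftware/bulletinboard:1.0",
  port: 8080,
};

// Moving to a new cluster or provider changes only the target, not `app`.
console.log(deployApp(app, new StubCluster("gke-1.20")));
console.log(deployApp(app, new StubCluster("eks-1.21")));
```

Because `deployApp` depends only on the `ClusterTarget` interface, operators can add targets for new Kubernetes versions or providers without developers editing `app`.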

Application API Components

To address the requirements above, we have divided Shipa into two primary constructs:

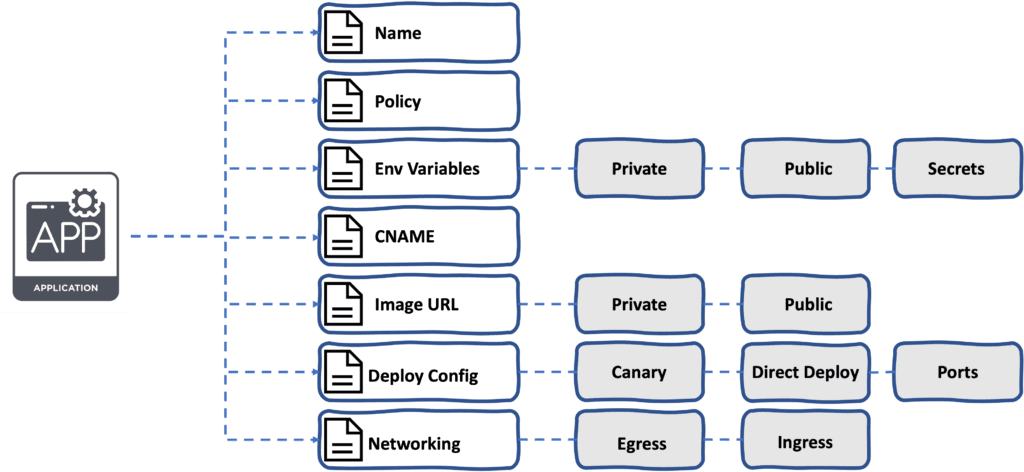

- Application: The application API definition that developers and ecosystem tools use to deploy and manage applications.

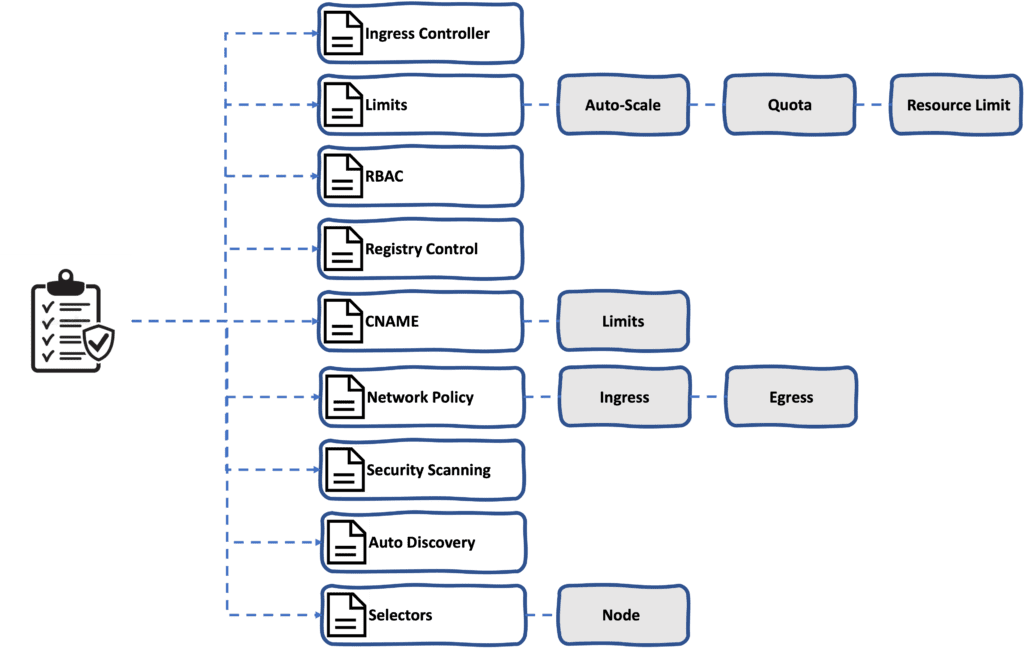

- Policies: A set of application-level policies that operators use to define controls that are enforced automatically as developers deploy applications.
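As a rough illustration of how operator-defined policies can be enforced automatically at deploy time, here is a hypothetical TypeScript sketch. It is not Shipa's schema: the `framework` and `teamOwner` fields mirror the `framework` and `teamowner` fields in the Pulumi example later in this post, treating `framework` as the name of the governing policy is an assumption, and the `allowedTeams` and `allowedRegistries` rules are invented for illustration.

```typescript
// Hypothetical application definition written by developers.
interface AppDefinition {
  name: string;
  teamOwner: string;
  framework: string; // assumed: name of the policy that governs this app
  image: string;
}

// Hypothetical policy written by operators.
interface DeployPolicy {
  name: string;
  allowedTeams: string[];
  allowedRegistries: string[];
}

// Checks an application against a policy at deploy time and returns every
// violation found; an empty array means the deployment may proceed.
function checkDeploy(app: AppDefinition, policy: DeployPolicy): string[] {
  const violations: string[] = [];
  if (app.framework !== policy.name) {
    violations.push(`app targets "${app.framework}", not "${policy.name}"`);
  }
  if (policy.allowedTeams.indexOf(app.teamOwner) === -1) {
    violations.push(`team "${app.teamOwner}" may not deploy under "${policy.name}"`);
  }
  // Simplification: treat the first path segment of the image as its registry.
  const registry = app.image.split("/")[0];
  if (policy.allowedRegistries.indexOf(registry) === -1) {
    violations.push(`registry "${registry}" is not allowed`);
  }
  return violations;
}

const policy: DeployPolicy = {
  name: "policy-fw-name",
  allowedTeams: ["shipa-team"],
  allowedRegistries: ["docker.io"],
};

const bulletinboard: AppDefinition = {
  name: "pulumi-app-1",
  teamOwner: "shipa-team",
  framework: "policy-fw-name",
  image: "docker.io/shipasoftware/bulletinboard:1.0",
};

console.log(checkDeploy(bulletinboard, policy).length); // → 0
console.log(checkDeploy({ ...bulletinboard, image: "quay.io/example/app:1.0" }, policy).length); // → 1
```

The developer never writes the rules; the check runs on every deploy against whatever policy the operators attached.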

The constructs above follow the same design principles as the Kubernetes API, so you can use them across different clusters, Kubernetes versions, and providers. They also implement a standard interface that Infrastructure-as-Code (IaC) and pipeline tools can consume.

Here is an example of the application construct definition:

And this is an example of the policy construct definition:

Consuming The APIs

Because these APIs use the same design principles as the Kubernetes API, you can use different tools in your stack to consume them.

Pulumi is one good example. If you deployed a sample NGINX application to Kubernetes directly with Pulumi, the code would look like this:

```typescript
import * as k8s from "@pulumi/kubernetes";

// Assumes `name`, `namespaceName`, and `cluster` (an object exposing a
// Kubernetes `provider`) are defined earlier in the Pulumi program.
const appLabels = { appClass: name };

// Create the NGINX Deployment
const deployment = new k8s.apps.v1.Deployment(
  name,
  {
    metadata: {
      namespace: namespaceName,
      labels: appLabels,
    },
    spec: {
      replicas: 1,
      selector: { matchLabels: appLabels },
      template: {
        metadata: {
          labels: appLabels,
        },
        spec: {
          containers: [
            {
              name: name,
              image: "nginx:latest",
              ports: [{ name: "http", containerPort: 80 }],
            },
          ],
        },
      },
    },
  },
  {
    provider: cluster.provider,
  },
);

// Export the Deployment name
export const deploymentName = deployment.metadata.name;

// Create a LoadBalancer Service for the NGINX Deployment
const service = new k8s.core.v1.Service(
  name,
  {
    metadata: {
      labels: appLabels,
      namespace: namespaceName,
    },
    spec: {
      type: "LoadBalancer",
      ports: [{ port: 80, targetPort: "http" }],
      selector: appLabels,
    },
  },
  {
    provider: cluster.provider,
  },
);
```

Now, if you used Shipa’s API on top of Kubernetes and had Pulumi create the deployment through it, the code would look like this instead:

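```typescript
// Assumes the Shipa Pulumi provider is imported as `shipa`.

// Register the application with Shipa
const app = new shipa.App("app", {
  app: {
    name: "pulumi-app-1",
    framework: "policy-fw-name", // policy framework that governs this app
    teamowner: "shipa-team",
  },
});

// Deploy a container image to the application
const appDeploy = new shipa.AppDeploy(
  "app-deploy",
  {
    app: "pulumi-app-1",
    deploy: {
      image: "docker.io/shipasoftware/bulletinboard:1.0",
      port: 8080,
    },
  },
  // The app is referenced by name only, so declare the dependency explicitly
  { dependsOn: [app] },
);

export const appName = appDeploy.app;
```

The second example is an application definition that developers can easily consume, manage, and extend, which helps drive adoption across your organization.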
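With the second example, Kubernetes objects still have to be created; the platform creates them on the developer's behalf. As a rough illustration only, not Shipa's implementation, the hypothetical `toKubernetesObjects` function below expands a flattened version of the `AppDeploy` fields into a Deployment and a LoadBalancer Service like those in the first Pulumi example. The `AppDeploySpec` and `K8sObject` types are invented for this sketch, and the replica count, Service type, and ports are assumptions copied from that first example.

```typescript
// Hypothetical app-level deploy spec, flattened from the `shipa.AppDeploy` fields.
interface AppDeploySpec {
  app: string;
  image: string;
  port: number;
}

// Minimal shape of a Kubernetes object as plain data.
interface K8sObject {
  apiVersion: string;
  kind: string;
  metadata: { name: string; labels: { [key: string]: string } };
  spec: { [key: string]: unknown };
}

// Expands the app-level spec into the infrastructure-level objects the first
// Pulumi example writes by hand. Replicas, Service type, and ports are assumed.
function toKubernetesObjects(deploySpec: AppDeploySpec): K8sObject[] {
  const labels = { app: deploySpec.app };
  const deployment: K8sObject = {
    apiVersion: "apps/v1",
    kind: "Deployment",
    metadata: { name: deploySpec.app, labels },
    spec: {
      replicas: 1,
      selector: { matchLabels: labels },
      template: {
        metadata: { labels },
        spec: {
          containers: [
            {
              name: deploySpec.app,
              image: deploySpec.image,
              ports: [{ name: "http", containerPort: deploySpec.port }],
            },
          ],
        },
      },
    },
  };
  const service: K8sObject = {
    apiVersion: "v1",
    kind: "Service",
    metadata: { name: deploySpec.app, labels },
    spec: {
      type: "LoadBalancer",
      ports: [{ port: 80, targetPort: "http" }],
      selector: labels,
    },
  };
  return [deployment, service];
}

const objects = toKubernetesObjects({
  app: "pulumi-app-1",
  image: "docker.io/shipasoftware/bulletinboard:1.0",
  port: 8080,
});
console.log(objects.map((o) => o.kind).join(", ")); // → Deployment, Service
```

The developer supplies three values; everything else is decided once, by the platform, for every application.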

Conclusion

We believe an application-level API will enable you to deliver on the goals listed above and build on top of it.

The following posts will discuss the application and policy constructs in detail, how you can leverage them across different tools, and how to integrate them into your broader monitoring and incident management stack.

We will also discuss how consuming Shipa’s application-level API delivers monitoring and other required features as part of the API model.

Stay tuned!